Summary:

Banks have poured billions into digital transformation, yet productivity has stuttered as legacy complexity and rising IT overheads keep cost-to-income ratios stubbornly high. AI, however, offers a different path: moving from task-focused software to outcome-driven agents that can synthesise knowledge, compress build cycles, and fix fragmented workflows.

The opportunity is real, but so are the constraints. Unlocking agentic AI’s upside demands rethinking how humans and systems share control, rebuilding data and API foundations, and scaling autonomy in measured steps with rigorous human-style QA.

Software ate the world, but did it make it better?

Software’s impact on enterprise productivity, particularly in banking, is a paradox. Over the past two decades, banks have invested heavily in digital transformation. According to Gartner, global IT spend in financial services exceeded $650 billion in 2023, with heavy spending on cloud migration, core banking modernization, cybersecurity, and AI initiatives. Yet despite this, productivity in banking has stagnated or declined.

In most developed markets, the cost-to-income ratio for incumbent banks has remained stubbornly high even as digital channel use is now almost ubiquitous. According to Boston Consulting Group, IT spend absorbs 10% of bank revenue, and the complexity of stitching together legacy infrastructure with cloud-native tools has created more overhead, not less.

The promise was that software would eliminate low-value work and unlock productivity. But in banking, it often just relocates that work into a digital maze, especially for incumbents: large multinational banks and credit unions alike.

Generative AI will transform the status quo in financial services

With Large Language Models (LLMs), we have both an entirely new way to assemble and manipulate knowledge and a new way to interact with and build software. It’s the combination of these two facets that enables a rethinking of how people interact with computers and could potentially solve the productivity paradox:

Generative AI is a shift from pre-programming software that helps us complete tasks to training agents that can deliver the outcomes we want.

Let’s look at how these two facets are combining to shake up every industry that relies on software, including financial services.

An entirely new way to assemble and manipulate knowledge

Since OpenAI launched ChatGPT, arguably the first viable and accessible large language model product, in November 2022, the ability of LLMs to generate coherent and contextually aware outputs has become clear.

- Generating knowledge, not just retrieving information. These models are not merely predictive text engines; they synthesize information across vast knowledge bases, extract and reframe our ideas, and generate new insights. These models are capable of summarizing complex technical documents, translating ideas across fields, and drawing connections between seemingly disparate topics—effectively operating as knowledge generators rather than just information retrievers.

- Radically reducing software development time. Today developers use tools like GitHub Copilot or Cursor to radically compress development timelines. The role of the software developer has irrevocably changed to the point where productivity without these new tools feels archaic. A 2024 McKinsey report estimated that developers using AI coding assistants can reduce completion time for writing code by 35-45%. GitHub reports that more than 46% of code on its platform is now written with AI assistance. So effective are these coding assistants that Griffin, a UK Banking-As-A-Service platform, reports that its customers can build entire working prototypes while on a 30-minute sales call.

This shift is mirrored across knowledge work. In the UK, roughly half of knowledge workers use an LLM weekly. In financial services, which initially hesitated to allow staff access to GenAI tools, the stance has evolved dramatically. In early 2023, major institutions like JPMorgan Chase and HSBC placed restrictions on LLM usage. By mid-2024, however, these same institutions were actively rolling out internal GPT-based copilots for everything from regulatory compliance documentation to software deployment support.

Banks are cutting deals with foundational model providers, with NatWest and OakNorth announcing partnerships with OpenAI and Lloyds Banking Group announcing an AI deal with Google Cloud in June 2025. What was once viewed as a risk is now becoming a competitive necessity.

Unleashing use cases across financial services

Generative AI (GenAI) excels where detailed knowledge already exists, but is scattered across systems and poorly structured. Several high-potential use cases stand out for financial services:

- Customer service. From webchat to voice, the data needed to resolve a customer query is often scattered across systems and people. When GenAI is thoughtfully deployed, the results can be staggering. Look at Monzo’s recent partnership with Gradient Labs, where 90% of queries are resolved by AI with a high quality assurance pass rate.

- Portfolio management. Wealth management similarly grapples with huge volumes of unstructured data at every decision point, from onboarding a customer to an advisor assembling insights on a customer's portfolio. BCG estimates that GenAI can yield a 50% reduction in working hours required to review client files, and a 30% saving in advisory resources. Like the software engineer, the role of the financial advisor is rapidly evolving. UBS is using GenAI through its STAAT AI platform to generate pre-meeting briefings that include portfolio and market move summaries for High Net Worth clients.

- Financial advice. Generative AI has the potential to enable disruptive new entrants to offer complex financial advice to a wider audience. Digital wealth managers and digital banks see the opportunity to build propositions that take market share from incumbents. Robinhood’s AI offering, Cortex, will offer a new suite of tools to empower people to make better investing decisions. Cortex can “translate your beliefs about a stock into a specific options trade and strategy” instantly - the same service you would get from a wealth manager.

- Insurance underwriting. AI agents can help underwriters evaluate more risks by removing manual tasks from their workflow, providing real-time cross-checks, and ensuring compliance with regulations. For example, Zurich Insurance Group is using tools like Azure OpenAI to support risk evaluations. Munich Re has piloted agent-led data extraction for underwriting submissions. In April 2025, the Lloyd’s Market Association (LMA) found that 14% of London market firms had already deployed or tested agentic or generative AI in underwriting.

- Insurance claims. AI can automate the extraction and validation of claims data from forms, emails and websites. It can cross-reference claim information with policies and coverage terms, and route claims for approval or escalation based on pre-defined rules. Australia’s QBE has explored autonomous agents in claims triage.

A new language to interact with computers

Even using an LLM in your browser without any customisation, you’ll notice its uncanny ability to take instructions. Through prompting, we ask these foundational models to behave in the ways we want, and dictate how they should classify and present back information to us.

It’s this ability that yields a new type of software: Agentic AI. Goal-driven software of this kind has the potential to solve more valuable use cases everywhere, but especially in complex systems like financial services.

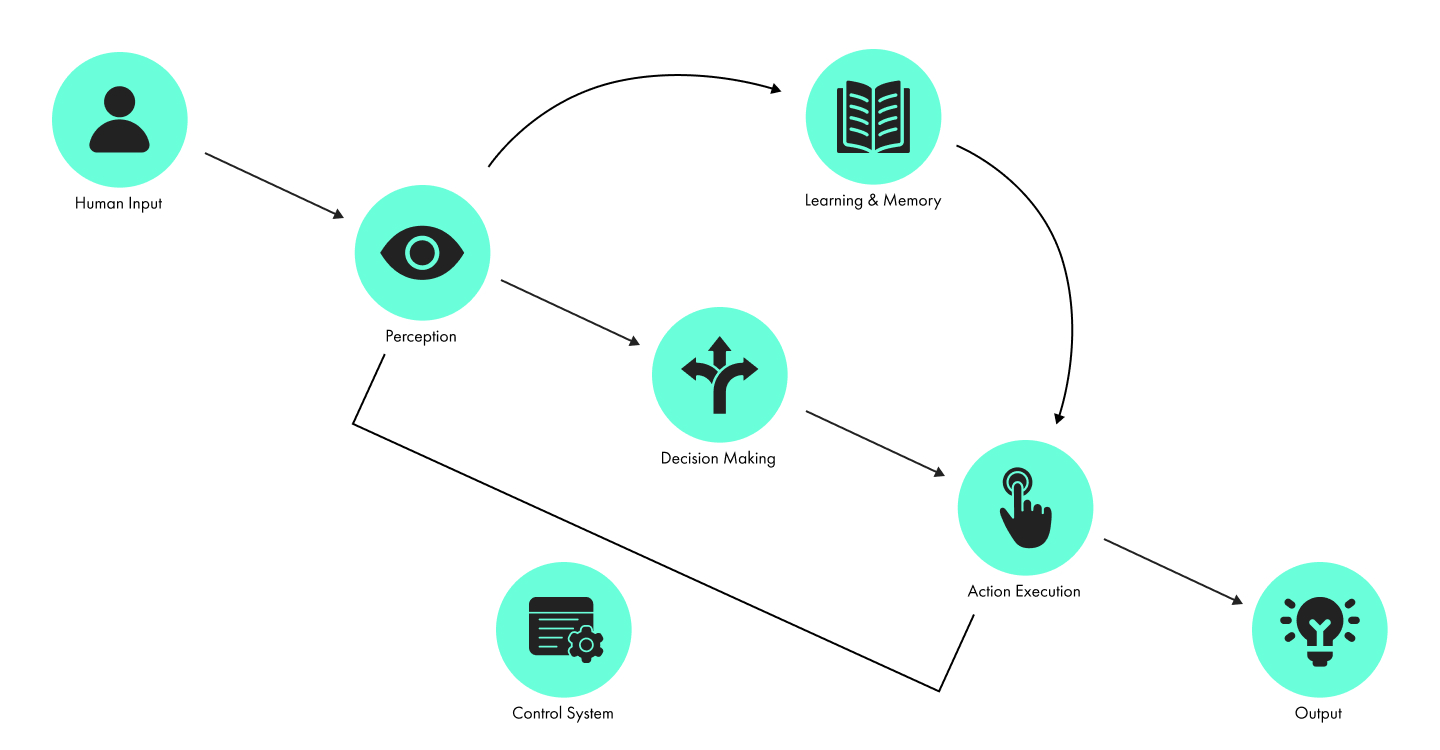

Traditional software systems are rule-bound, following rigid workflows designed by humans. Those workflows can be interoperable but they have to be hard-coded. By contrast, Agentic AI is goal-driven and adaptive, meaning it can interpret objectives expressed by us in natural language, perceive its environment, plan and execute actions, and learn from feedback. Agentic AI mimics a cognitive process (see Figure 1):

1. It can perceive. The agent interfaces with the environment, taking inputs and planning its next steps.

2. It can process. The agent processes these inputs through a cognitive loop, using LLMs or large reasoning models (LRMs) to understand the user’s underlying goal and break that goal down into sub-tasks (e.g. fetching weather data, reasoning about its meaning, comparing it against thresholds).

3. It can act. The agent produces responses or instructions that invoke specific tools. It can call an Application Programming Interface (API), run a script, or manipulate files to gather information.
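The perceive, process, act loop above can be sketched in a few lines of Python. This is a minimal illustration rather than a production agent: the planner is a hard-coded stand-in for an LLM call, and the tool name `get_temperature_c`, the canned readings, the goal wording, and the 30°C threshold are all assumptions invented for this example.

```python
from dataclasses import dataclass, field
from typing import Callable


# Hypothetical tool: a real agent would call a weather API here.
def get_temperature_c(city: str) -> float:
    canned = {"London": 18.0, "Dubai": 41.0}
    return canned[city]


TOOLS: dict[str, Callable[[str], float]] = {"get_temperature_c": get_temperature_c}


@dataclass
class Agent:
    threshold_c: float = 30.0  # assumed threshold for "too hot"
    log: list[str] = field(default_factory=list)

    def perceive(self, goal: str) -> str:
        # 1. Perceive: take the raw input from the environment.
        self.log.append(f"perceived: {goal}")
        return goal

    def process(self, goal: str) -> list[tuple[str, str]]:
        # 2. Process: break the goal into sub-tasks.
        # Stand-in for an LLM planner: pick out any known city in the goal.
        cities = [c for c in ("London", "Dubai") if c in goal]
        plan = [("get_temperature_c", city) for city in cities]
        self.log.append(f"planned: {plan}")
        return plan

    def act(self, plan: list[tuple[str, str]]) -> dict[str, str]:
        # 3. Act: invoke each tool and compare the result with the threshold.
        results = {}
        for tool_name, city in plan:
            temp = TOOLS[tool_name](city)
            results[city] = "too hot" if temp > self.threshold_c else "ok"
        self.log.append(f"acted: {results}")
        return results

    def run(self, goal: str) -> dict[str, str]:
        return self.act(self.process(self.perceive(goal)))


agent = Agent()
verdicts = agent.run("Is it too hot to hold the offsite in London or Dubai?")
print(verdicts)
```

In a real agent the `process` step is where the model does its work; the surrounding loop and the tool registry stay much the same.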

Let’s take the example of booking a flight in a corporate setting:

If you work at a large business it’s likely that you’ll need to find a flight on a booking platform and also need to obtain approval for the expense. It’s likely your business will use an expense management tool like Pleo, Spendesk or Sage. Using these tools you can request approval from a manager and generate a single-use virtual payment card for the transaction. Once approval is secured and the payment method is ready, you return to the website to make your bookings.

This process is enabled by a constellation of Software-as-a-Service (SaaS) providers. Even if those SaaS providers individually represent the cutting edge of fintech by utilising single-use cards, approval routing, and real-time spend tracking, humans are still deeply involved in operating the handoffs between each system.

Let’s now reimagine the same use case through the lens of Agentic AI:

1. Rather than interacting with each service manually, the user prompts an AI agent: "Book me a flight from New York to San Francisco on the 24th of April, for under $500."

2. The agent autonomously canvasses the internet (all relevant travel booking sites and APIs) to identify the most suitable options that match the given criteria. It compiles the best choices and presents them to the user for selection.

3. Once a choice is made, the agent proceeds to the next phase: payment and approval. Here’s where it gets interesting - instead of using your expense management tool, the agent could directly interface with the relevant stakeholders, like your manager, to obtain approval. It can then request a virtual payment card from a service like Stripe directly, pre-configured with the precise amount needed for the transaction and any relevant usage restrictions.

4. With the payment method in hand, the agent returns to the chosen travel platform and completes the purchase autonomously.
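The four steps above can be sketched as a single orchestration function. Everything here is a hypothetical stand-in: `search_flights`, `request_approval`, `issue_virtual_card`, and `book` are stubs invented for illustration and do not correspond to any real travel, expense, or Stripe API. The point is the shape of the flow, in particular that the card is capped at the exact fare and that the agent stops if approval is refused.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flight:
    carrier: str
    price_usd: int


@dataclass(frozen=True)
class VirtualCard:
    limit_usd: int
    single_use: bool = True


def search_flights(origin: str, dest: str, date: str) -> list[Flight]:
    # Stub: a real agent would query booking sites and APIs.
    return [Flight("AirA", 540), Flight("AirB", 420), Flight("AirC", 465)]


def request_approval(manager: str, flight: Flight) -> bool:
    # Stub: a real agent would message the approver and wait for a reply.
    return flight.price_usd <= 450


def issue_virtual_card(limit_usd: int) -> VirtualCard:
    # Stub: the card is capped at the exact amount of the transaction.
    return VirtualCard(limit_usd=limit_usd)


def book(flight: Flight, card: VirtualCard) -> str:
    if flight.price_usd > card.limit_usd:
        raise ValueError("fare exceeds card limit")
    return f"CONFIRMED {flight.carrier} ${flight.price_usd}"


def book_trip(origin: str, dest: str, date: str, budget_usd: int, manager: str) -> str:
    # Steps 1-2: search, keep options under budget, cheapest first.
    options = sorted(
        (f for f in search_flights(origin, dest, date) if f.price_usd < budget_usd),
        key=lambda f: f.price_usd,
    )
    if not options:
        return "NO OPTIONS UNDER BUDGET"
    choice = options[0]  # stand-in for the user picking from the shortlist
    # Step 3: approval first, then a card limited to the fare.
    if not request_approval(manager, choice):
        return "APPROVAL REFUSED"
    card = issue_virtual_card(choice.price_usd)
    # Step 4: complete the purchase.
    return book(choice, card)


result = book_trip("New York", "San Francisco", "24 April", budget_usd=500,
                   manager="manager@example.com")
print(result)
```

Keeping approval and card issuance as separate, explicit steps means each handoff can be logged and reviewed, even though no human operates it.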

The above example is taken from Stripe’s new Agentic Software Development Kit (SDK) launched in 2025 and is a good example of a fintech company already developing services to enable Agentic AI.

If an agent can process a payment, it can become embedded deep into complex workflows where payments are crucial. It’s perhaps no surprise that both Visa and Mastercard launched their own Agentic payment solutions in 2025.

Reimagining financial services

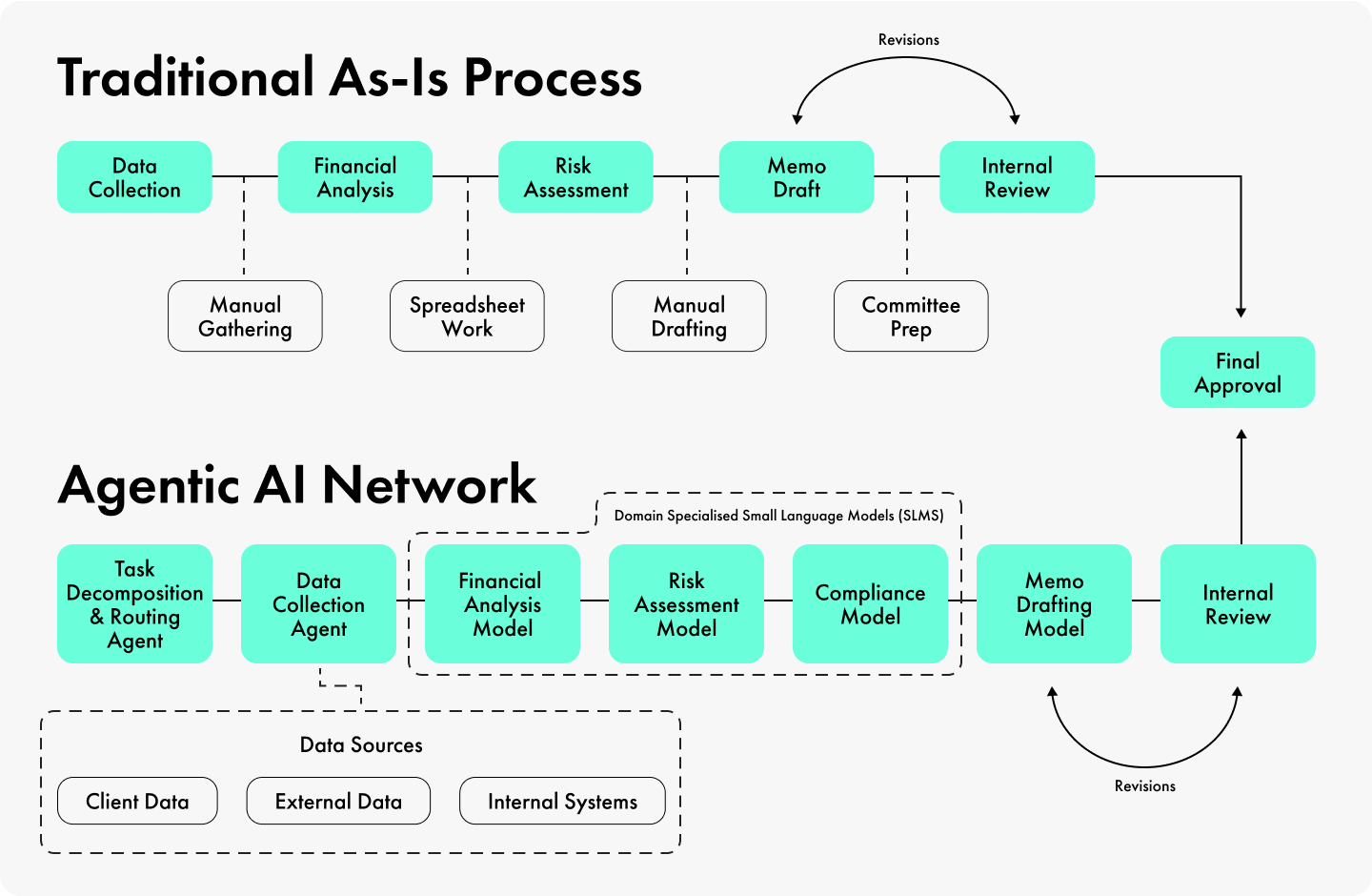

Agentic AI forces a fundamental rethink of how humans participate in workflows, particularly in the back-office. It’s in complex back-office workflows, which require a high degree of parsing unstructured data, where Agentic AI has the greatest potential to make an impact in financial services. Take the example of generating a credit memo in Figure 2:

Each step in the ‘traditional as-is’ process involves multiple people and systems, handing data over from one step to the next. An agentic network processes the inputs and drafts the memo. The operator’s role is now a reviewer and an optimiser, analysing the performance of the agent and fine-tuning its behaviour to produce better results.

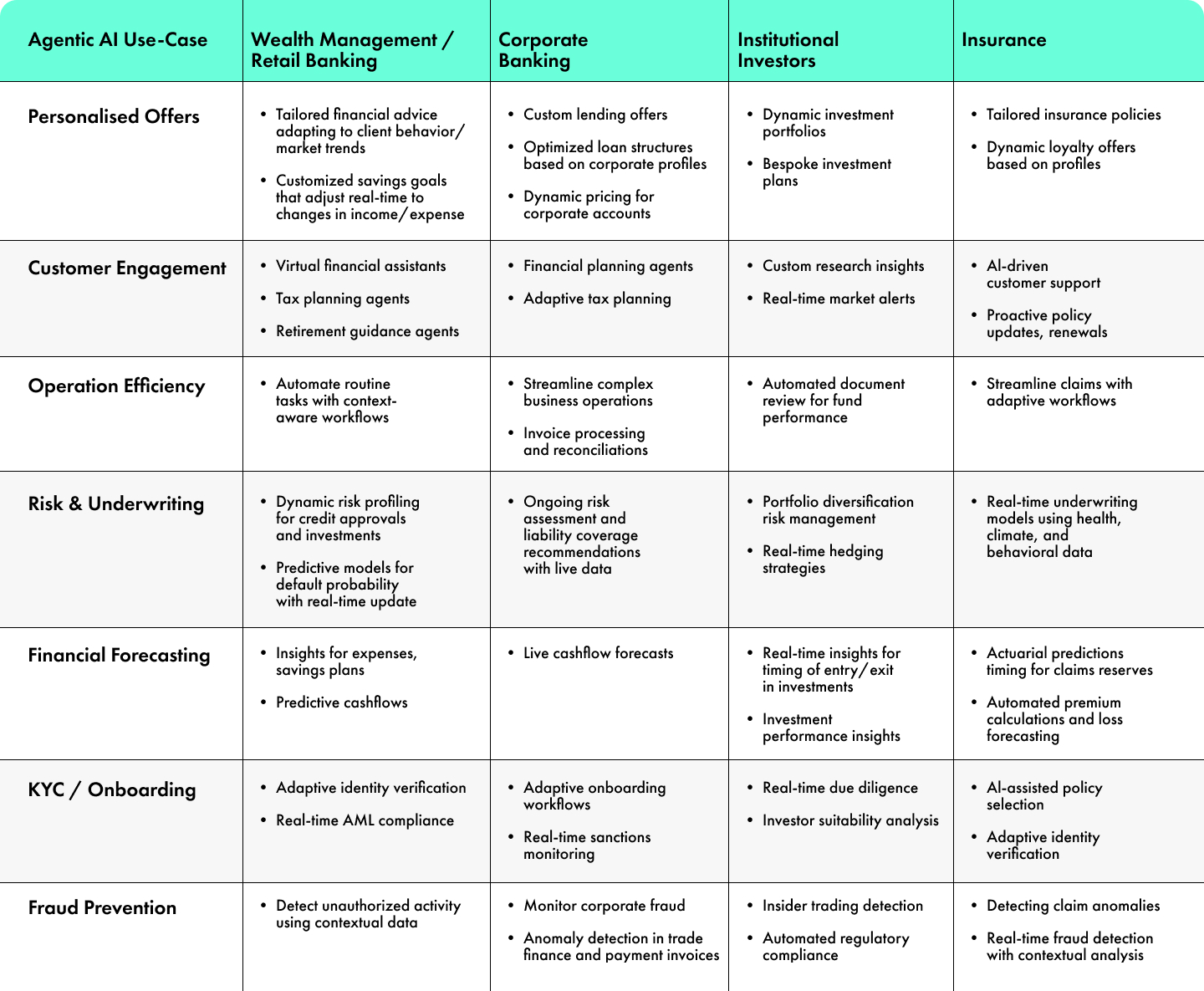

Looking deeper within financial services, institutions grapple with thousands of highly intricate workflows. These workflows often involve extensive data integration, manual reconciliation, and reliance on a large portfolio of SaaS vendors, each fulfilling a narrow slice of functionality. Citibank’s 2025 report on ‘Finance & The Do-It-For-Me Economy’ outlines seven disruptive use cases where Agentic AI can make a big impact across all facets of the financial services industry.

The potential value unlocked from each of these use cases is clear. Even in customer support, a more foundational use of the technology, human agents juggle multiple interfaces (chat platforms, knowledge bases, ticketing systems), leading to long resolution times and high churn. A 2023 survey found that bank branch employees use four different systems on average to complete their tasks.

The next frontier: Verticalised, Agentic AI

So far we’ve looked at AI agents completing ‘horizontal’ workflows. The next frontier is to deploy agents that are verticalised, connecting a back-office workflow to the front-office and the customer interaction. In financial services, this could be the end-to-end application for a loan, opening a bank account, or processing an insurance claim.

A good example here is Salient, a startup backed by Y Combinator. Salient is designed to handle the servicing of an automotive loan end-to-end: bespoke AI agents handle all customer interactions by voice, take payments from customers, process claims, detect non-compliance, and compile reports.

What could enable the proliferation of Agentic AI?

By the end of 2025, there were no scaled use cases of Agentic AI in production at any incumbent bank that we can find, but the acceleration of the technology is phenomenal. Here are three developments to watch:

Agents talking to Agents:

An API allows two systems to talk to each other. For an agent to work across workflows, there must be an equally standardised mechanism for agents to reach the systems, and the other agents, around them. Anthropic launched and open-sourced the API equivalent for agents, the Model Context Protocol (MCP), in late 2024. MCP gives AI agents a common way to discover and call external tools and data sources, so that every agent works from the same description of what a system can do. For example, when Griffin learned that its customers were building prototypes using its software during a sales call, Griffin quickly built an MCP server for its customers' agents to “open accounts, make payments, and analyse historic events” in minutes.

If MCP connects an agent to tools and data, Google’s Agent2Agent Protocol (A2A) standardises how agents discover each other and hand off work. Rather than just wiring an agent into a system, A2A lets an orchestrating agent delegate tasks to specialised agents and track them to completion in a structured way.

This makes A2A particularly powerful in a financial services context where complex tasks require ‘chaining’ reasoning, verification, and execution in a controlled stack, rather than simply handing data from one system to another.
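To make the idea of a standard interface for agents concrete, the sketch below mimics the JSON-RPC 2.0 request and response shapes that MCP uses for tools (`tools/list` to discover capabilities, `tools/call` to invoke one). It is a simplified, in-process toy, not the official MCP SDK: it skips the initialisation handshake and transport, and the `open_account` tool is a hypothetical example, not Griffin's real API.

```python
import json
from typing import Any


# Hypothetical banking tool, invented for illustration.
def open_account(owner: str) -> str:
    return f"account opened for {owner}"


TOOLS: dict[str, dict[str, Any]] = {
    "open_account": {
        "description": "Open a bank account for a named owner.",
        "inputSchema": {
            "type": "object",
            "properties": {"owner": {"type": "string"}},
            "required": ["owner"],
        },
        "handler": open_account,
    }
}


def handle(request_json: str) -> str:
    """Answer one JSON-RPC 2.0 request in the style MCP uses for tools."""
    req = json.loads(request_json)
    method, params = req["method"], req.get("params", {})
    if method == "tools/list":
        # Discovery: describe every tool and the arguments it expects.
        result = {"tools": [
            {"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
            for n, t in TOOLS.items()
        ]}
    elif method == "tools/call":
        # Invocation: look the tool up by name and pass the arguments through.
        tool = TOOLS[params["name"]]
        text = tool["handler"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return json.dumps({"jsonrpc": "2.0", "id": req["id"],
                           "error": {"code": -32601, "message": "Method not found"}})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


# An agent first discovers what it can do, then calls a tool by name.
listing = json.loads(handle(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
call = json.loads(handle(json.dumps({
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": "open_account", "arguments": {"owner": "Acme Ltd"}},
})))
print(listing["result"]["tools"][0]["name"])
print(call["result"]["content"][0]["text"])
```

Because the tool describes its own inputs, an agent that has never seen this bank's systems can still work out how to call them, which is what makes a quickly built integration like Griffin's plausible.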

Open Source vs Closed models:

The landscape has long been dominated by closed-source players like OpenAI’s GPT models or Google’s Gemini. For banks, these closed models introduce fundamental compliance and risk management challenges. When DeepSeek launched R1 in January 2025 as an open source model that rivalled OpenAI’s latest model at a fraction of the cost, it prompted the industry to consider a more open source future.

The rise of the SLM (small language model):

These are lightweight, highly optimised models that require significantly less compute power than LLMs while still performing well on domain-specific tasks. SLMs could deliver 80-90% of what an LLM can do at 1/100 of the cost and with less environmental damage.

Scaled adoption will come down to questions of auditability, liability, and scale potential

The practical adoption of Agentic AI in regulated industries such as banking, investment management and insurance is hindered by serious concerns. Chief among these are hallucinations, a lack of auditability, scalability issues, and computing requirements. Let’s look at the first three in turn:

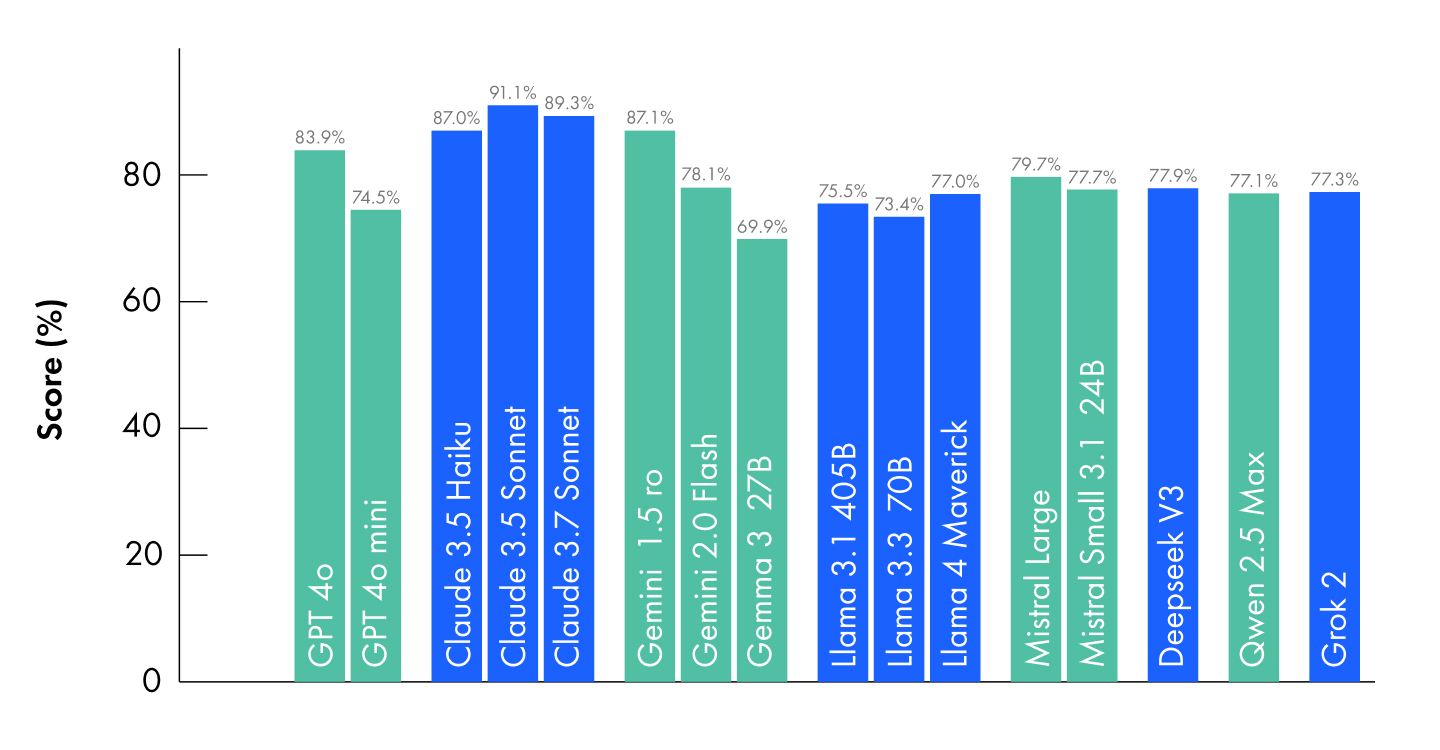

1. Hallucinations: At their most basic level, LLMs work by using a huge corpus of identified patterns to suggest which string of language most plausibly comes next. This can result in hallucinations: factually incorrect or fabricated information. The pace of development in foundational models means that statistics on hallucinations are constantly changing. A May 2025 report by Hugging Face found that even OpenAI’s GPT-4o, one of the more recent foundational models, delivers only an 84% accuracy rate (Figure 4). In other words, 16% of its responses to user prompts are factually inaccurate.

2. Auditability: An LLM is a kind of neural network. Neural networks are, by design, not explainable. In much the same way that we cannot analyse how neurons fire in a human brain to establish how a decision was made, we cannot trace an LLM’s output back through its internal activations. Where banks have a duty to report and explain the decisions they make, how can we entrust those decisions to a system that is unexplainable? A huge amount of research has gone into bringing degrees of explainability to LLM outputs, for example Google’s Gemma Scope, which lets us see how an LLM made connections between different words in a query. However, true auditability of the kind we’re used to with classical software may simply never be possible.

3. Scalability: What may work for a few thousand customers does not necessarily work at the kind of scale large banks and insurance companies deal with. The most public example of this is Klarna, whose CEO announced the layoff of roughly 700 staff to be replaced by AI, only to reverse course and rehire workers once customers complained about the poor quality of customer service. Does this current generation of AI genuinely scale to a population-level audience? The jury’s out.
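One reason the 84% figure in point 1 matters more for agents than for chatbots is that agents chain steps together. As a rough illustration only, assume each step in a workflow succeeds independently with the same probability. That is a simplifying assumption made for this sketch, not a finding from the report cited above. The chance that an n-step workflow completes without error is then the per-step accuracy raised to the power n:

```python
def chain_accuracy(per_step_accuracy: float, steps: int) -> float:
    """Probability that every step succeeds, assuming independent steps."""
    if not 0.0 <= per_step_accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return per_step_accuracy ** steps


# Compare a single response with progressively longer agent workflows.
for steps in (1, 3, 5, 10):
    print(steps, round(chain_accuracy(0.84, steps), 3))
```

Under this assumption, a five-step workflow built on 84%-accurate steps finishes cleanly less than half the time, which is why per-step accuracy and human review points matter so much in agentic designs.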

These issues raise a number of more profound questions. Our system of the world relies on trust in human decision making. Where a human makes a mistake, there is a human-to-human understanding of what caused that mistake and we all live within cultural and legal frameworks which ‘process’ human fallibility.

Where a mistake was caused by genuine error, we forgive. Where error occurs from malice, we prosecute, and so on. How do we treat a robot? An AI agent may currently only be accurate 80% of the time, but are humans more accurate? I suspect not, at least not always.

An AI agent can’t explain how its neurons fired to arrive at an answer, but a human can’t do that either. To what extent do we, as a society, choose to penalise or handicap an AI system because it is not human? What do those thresholds look like?

There are similarly profound questions of risk and liability, particularly relevant for financial services. Who pays when something goes wrong? The banking industry is working from a blank slate: we have no precedents for how badly things can go wrong when an agent makes the wrong payment or gives a wrong decision that harms the customer. What bank is prepared to take that risk?

How should firms think about adopting Agentic AI?

1. Start small and think practically. Banks and insurers should not wait for the perfect framework or approach to come along. It’s still early days for generative AI and the entire ecosystem, from foundational models through to new SaaS vendors, is constantly changing. Pick a valuable but manageable use case such as customer service, fraud checks or data classification. That first deployment creates a blueprint for how teams in product, risk, and compliance can come together to govern these systems.

2. Make the right foundational investments. Generative AI, like any component of your tech stack, cannot sit in isolation. Unfortunately it’s still a misconception that a use case developed in an isolated environment (like an in-house incubator or innovation lab) can simply scale linearly across an organisation. For Agentic AI to stick and move beyond frontline support (like chatbots) into complex back-office workflows, these agents will need access to a robust data infrastructure with API connectivity and integration into legacy systems.

3. Benchmark on human terms. If we are to deploy GenAI to solve human problems and undertake human tasks, then applying typical software evaluation methods just won’t work. Like a human’s, the outputs of a GenAI system are ‘non-deterministic’: two identical prompts may yield slightly different but equally valid responses, and there are near-infinite paths a customer interaction can take. A mindset shift is needed here. If we are to evaluate the performance of an agent against a human-led process, then we need to put GenAI agents through the same or similar ongoing quality assurance and performance monitoring that we apply to humans.

4. Design a scaling framework. With benchmark frameworks in place, firms should stage deployment. For example, an agent could start by summarising information for human action, then move to a ‘co-pilot’ mode where the AI drafts responses for humans to approve, and only then progress to limited autonomy in narrow, low-risk domains. Progression could depend on meeting defined measurement gates, such as error rates staying below a certain threshold or Net Promoter Score (NPS) at parity with human staff.

5. Set realistic expectations and be patient. Generative and Agentic AI are incredibly impressive technologies, but they are not magic and they are certainly not perfect. It is one thing to reach a 60% or 70% accuracy level; it is quite another to reach 95% or better. So set realistic expectations about the work that will be needed to apply AI to the unique context of your business, train it to an acceptable level of accuracy and manage the risk of mistakes.
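The staged rollout in point 4 can be expressed as a simple promotion rule. The sketch below is one possible shape, not a recommended policy: the three stages are one reading of the text, while the 2% error-rate ceiling, the NPS comparison, and the names `Metrics` and `next_stage` are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from enum import IntEnum


class Stage(IntEnum):
    SUMMARISE = 1          # agent summarises, human acts
    CO_PILOT = 2           # agent drafts, human approves
    LIMITED_AUTONOMY = 3   # agent acts alone in narrow, low-risk domains


@dataclass(frozen=True)
class Metrics:
    error_rate: float      # share of sampled outputs failing QA review
    agent_nps: float       # NPS for agent-handled interactions
    human_nps: float       # NPS for the human-led baseline


MAX_ERROR_RATE = 0.02      # assumed gate; each firm would set its own


def next_stage(current: Stage, m: Metrics) -> Stage:
    """Promote by one stage only if every gate passes; otherwise hold."""
    gates_passed = m.error_rate <= MAX_ERROR_RATE and m.agent_nps >= m.human_nps
    if gates_passed and current < Stage.LIMITED_AUTONOMY:
        return Stage(current + 1)
    return current


print(next_stage(Stage.SUMMARISE, Metrics(0.01, 42, 40)).name)  # gates pass
print(next_stage(Stage.CO_PILOT, Metrics(0.05, 45, 40)).name)   # error gate fails
print(next_stage(Stage.CO_PILOT, Metrics(0.01, 35, 40)).name)   # NPS gate fails
```

Promoting one stage at a time keeps each increase in autonomy tied to evidence from the stage before it, in the same way a new human hire earns wider sign-off authority.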

This is the first part of our three-part series on the tech behind the future of finance. We'll send you parts two and three directly as soon as they're published.

